European Nuclear Society

e-news

Issue 14 Autumn 2006

http://www.euronuclear.org/e-news/e-news-14/nuclear-imaging.htm

Among the many applications of nuclear energy and ionising radiation, medical imaging is certainly the least subject to negative perception or outright opposition from the general public. Proponents of nuclear power correctly refer to it as an example of a very positive use of nuclear technology. Working in the field of medical imaging, I have found that some misunderstandings and confusion exist as to the principles behind the different medical imaging techniques and their potential diagnostic role. This paper, which is a shorter version of an article entitled 'Nuclear Imaging in the Realm of Medical Imaging' (Nuclear Instruments and Methods in Physics Research A 509 (2003) 213–228), gives a general introduction to the subject.

Medical imaging techniques can be classified according to a number of criteria. A particular classification scheme could use appearance, e.g. tomographic versus non-tomographic images and would group CT and MRI because of the similarity in image presentation. Another would classify the techniques according to the underlying physics. This is the classification scheme which is used here. Its basis will be the origin and nature of the radiation source that will carry the information about the patient to a detector.

When the radiation source is external, the body structures modulate the information through interactions with the radiation. In X-ray radiography or CT, an external point source of X-rays is used. The X-rays are partially absorbed when the rays pass through the body. The rays that are neither absorbed nor scattered move in straight lines between the point source and the detector (e.g. film), thus creating a shadow image of the bodily structures.

In ultrasound, an external source of pulsed sound waves is used. Both the time and the direction of the pulse are known. Interfaces between different tissues partially reflect the sound waves. By measuring the time span between the outgoing and the incoming sound pulse, images can be reconstructed.
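The time-of-flight principle described above can be sketched in a few lines. This is only an illustration: it assumes a uniform speed of sound of 1540 m/s in soft tissue (a conventional textbook average, not a value taken from this article), and the function name `echo_depth_m` is hypothetical.

```python
# Assumed average speed of sound in soft tissue (m/s); a conventional
# textbook value, not a figure from the article.
SPEED_OF_SOUND_TISSUE_M_S = 1540.0


def echo_depth_m(round_trip_time_s, speed_m_s=SPEED_OF_SOUND_TISSUE_M_S):
    """Depth of a reflecting interface from the pulse round-trip time."""
    # The pulse travels to the interface and back, hence the factor 1/2.
    return speed_m_s * round_trip_time_s / 2.0


# Example: an echo received 100 microseconds after the pulse was sent.
depth_cm = echo_depth_m(100e-6) * 100.0
print(f"interface depth: {depth_cm:.1f} cm")
```

Because the direction of the pulse is known as well, each echo can be placed at the computed depth along that direction, which is how a line of the image is built up.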

In endoscopy, an external light source illuminates internal organs through glass fibre. An ocular or small camera is used to observe the reflected light and hence the organ.

The body naturally and continuously radiates heat: its source is internal. In order to image the information carriers, some optics for infrared radiation are needed: a thermographic camera.

In MRI, the information is carried by radio waves emitted by hydrogen nuclei in the body. Although the body contains plenty of hydrogen nuclei, e.g. in water molecules, the nuclei do not naturally emit radio waves. In order for them to do so, they first have to be put in a magnetic field and then activated by means of well-chosen radio wave pulses at specific frequencies. The nuclei then 'answer' by emitting radio waves of similar frequencies. In MRI the internal sources are always present but they only emit information when activated to do so.

In nuclear imaging, the information is carried by gamma rays emitted by internal radioactive tracers. The body is naturally radioactive. For physical reasons due to the nature of the radioactive decay of the radioactive body constituents, imaging them is too difficult to be of any use. Also, from the medical point of view, the information would not be of much help. Therefore, artificial radioactive tracers are administered. They are chosen in such a way that their radioactive decay allows for external detection and that their space/time distribution reflects clinical information.

Because of their particular importance, ultrasound, MRI, radiography and nuclear imaging will be discussed in more detail.

The medical use of ultrasound is a spin-off from Japanese research on sonar. The first ultrasound scanners became available in the early fifties and the technique entered widespread clinical practice in the seventies.

The information in ultrasound originates from the reflection of sound waves emitted by an external source, typically a piezoelectric crystal with a resonance frequency between 1 and 10 MHz. Refraction, absorption and scattering also play a role, but mainly as factors that degrade the clinical information. The basic physical parameters of importance are the frequency of the wave, the speed of sound v and the density ρ of the tissue.

The reflected fraction at a muscle/fat interface is about 1%. At a skin/air interface the reflected fraction becomes 99.9%, hence the use of a gel to decrease this undesired reflection.
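The orders of magnitude quoted above can be checked with the standard expression for reflection at normal incidence, in which the acoustic impedance of a medium is the product of its density and its speed of sound. The sketch below is an illustration only: the tissue densities and sound speeds are approximate, assumed values (not taken from this article), and the function names are hypothetical.

```python
def acoustic_impedance(density_kg_m3, speed_m_s):
    """Acoustic impedance Z = density * speed of sound."""
    return density_kg_m3 * speed_m_s


def reflected_fraction(z1, z2):
    """Fraction of the intensity reflected at a flat interface,
    for a wave arriving perpendicular to it (normal incidence)."""
    return ((z2 - z1) / (z2 + z1)) ** 2


# Approximate, assumed material properties (kg/m^3, m/s).
MUSCLE = acoustic_impedance(1070.0, 1580.0)
FAT = acoustic_impedance(950.0, 1450.0)
SOFT_TISSUE = acoustic_impedance(1060.0, 1540.0)
AIR = acoustic_impedance(1.2, 343.0)

print(f"muscle/fat interface: {reflected_fraction(MUSCLE, FAT):.1%}")
print(f"tissue/air interface: {reflected_fraction(SOFT_TISSUE, AIR):.1%}")
```

The very large impedance mismatch between tissue and air is what makes a coupling gel necessary.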

Among many others, there are two typical artefacts in ultrasound. The first artefact is due to the coherent nature of the sound wave: the sound wave is a coherent pulse which will interfere with its reflected, refracted and transmitted components to give rise to speckling, similar to the speckling observed in laser light. The second artefact is due to the physics of reflection: interfaces between tissues that are parallel to the wave propagation will not reflect the wave and will therefore not be seen in ultrasound.

For some applications such as obstetrics or cardiology, the clinical information in the images is very high. Furthermore, the technique is safe and relatively inexpensive. Current research tends to eliminate artefacts, improve the image contrast and improve the presentation of the data. Many efforts are directed towards 3D or even 3D + time data acquisitions and representations.

FIGURE 1: 3D ultrasound (© 2000 General Electric, www.gemedicalsystems.com)

The MRI technique stems from physics research carried out by Gorter, Rabi, Purcell, Bloch and many others that led to the discovery and development of nuclear magnetic resonance techniques just before and after World War II. Medical applications and imaging were introduced in the seventies by, among others, Lauterbur, Damadian and Mansfield.

The basic information in MRI relates to:

the magnetisation of hydrogen nuclei (their magnetic moment is called 'spin'), denoted as N(H)

the energy transfer between the spins and tissue, characterised by a time constant T1

the energy redistribution among spins with a time constant T2

flow

Without an external magnetic field, the magnetic moment of the hydrogen nuclei will point at random in all directions. There will be no net magnetisation. In a large external magnetic field, the hydrogen nuclei in tissue will preferentially align their spin (1/2 or –1/2 due to quantum mechanical laws) along the magnetic field. More spins will align their spin in the direction of the field ('spin-up') than in the opposite direction ('spin-down') because the energy in spin-up direction is lower than in spin-down direction. The global energy of the spin system will, therefore, decrease while the magnetisation increases.

This magnetisation implies a transfer of energy from the spin system to another system: the 'lattice', or tissue in the case of MRI. This transfer of energy is characterised by an exponential relaxation law with a time constant T1, also called spin-lattice relaxation time. In typical MRI field strengths (0.5 to 1.5 T), T1 is typically of the order of 0.5 to 2 s, depending on the tissue type.

In addition to interacting with the lattice, the spins can also interact with each other: as one spin flips from down to up, another spin can absorb the released energy and flip from up to down. This spin-spin redistribution of energy, internal to the spin system, is also characterised by a relaxation time, called the spin-spin relaxation time and denoted T2. Typical values for T2 are 10 – 100 ms, again depending on the tissue type.
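The two exponential relaxation laws can be written out explicitly. The sketch below is a simplified illustration: it assumes the longitudinal magnetisation starts from zero and the transverse magnetisation from its full value, and the function names and the example values (chosen within the ranges quoted above) are hypothetical.

```python
import math


def longitudinal_recovery(t_s, t1_s, m0=1.0):
    """Magnetisation along the field, recovering from zero towards its
    equilibrium value m0 with the spin-lattice time constant T1."""
    return m0 * (1.0 - math.exp(-t_s / t1_s))


def transverse_decay(t_s, t2_s, m0=1.0):
    """Magnetisation perpendicular to the field, decaying from m0
    with the spin-spin time constant T2."""
    return m0 * math.exp(-t_s / t2_s)


# Example values within the ranges given in the text: T1 = 1 s, T2 = 50 ms.
for t in (0.05, 0.5, 1.0, 2.0):
    print(f"t = {t:4.2f} s  "
          f"longitudinal = {longitudinal_recovery(t, 1.0):.3f}  "
          f"transverse = {transverse_decay(t, 0.05):.3f}")
```

Since T2 is much shorter than T1, the transverse signal has essentially vanished long before the longitudinal magnetisation has recovered.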

For a typical MRI field strength of 1.5 T, the energy difference between spin-up and spin-down corresponds to radio waves with a resonant frequency of about 64 MHz.
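The resonant frequency scales linearly with the field strength, and the same energy difference determines how small the surplus of spin-up nuclei is at body temperature. The sketch below illustrates both points. It assumes the commonly tabulated proton value of 42.577 MHz per tesla and a body temperature of 310 K, neither of which is stated in this article; the function names are hypothetical.

```python
import math

GAMMA_BAR_MHZ_PER_T = 42.577        # proton gyromagnetic ratio / (2*pi), assumed
PLANCK_J_S = 6.62607015e-34         # Planck constant
BOLTZMANN_J_PER_K = 1.380649e-23    # Boltzmann constant


def larmor_frequency_mhz(field_t):
    """Resonant frequency of hydrogen nuclei in a field of field_t tesla."""
    return GAMMA_BAR_MHZ_PER_T * field_t


def spin_excess_fraction(field_t, temperature_k=310.0):
    """(N_up - N_down) / (N_up + N_down) for a two-level system
    in thermal (Boltzmann) equilibrium."""
    delta_e = PLANCK_J_S * larmor_frequency_mhz(field_t) * 1e6
    return math.tanh(delta_e / (2.0 * BOLTZMANN_J_PER_K * temperature_k))


for b in (0.5, 1.0, 1.5):
    print(f"B = {b:.1f} T  f = {larmor_frequency_mhz(b):.1f} MHz  "
          f"excess = {spin_excess_fraction(b) * 1e6:.2f} ppm")
```

Under these assumptions only a few nuclei per million contribute to the net magnetisation, which is why high field strengths are attractive.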

By sending radio waves at resonant frequency some spins which were spin-up will absorb the energy of the wave and flip to spin-down, thereby increasing the global energy of the spin system. The energy of the spin system will now no longer be in equilibrium with respect to the tissue temperature and hence violate the normal Boltzmann distribution in equilibrium. The spin system will subsequently re-emit the extra energy as radio waves at resonant frequency. By varying local magnetic fields ('gradients'), fine-tuning the frequency, the polarisation and the duration of radio wave pulses to excite the spin system, and by modulating the delay after which the re-emitted waves (the 'signal') are measured, MRI images can be reconstructed. The contrast in the images then depends on the four following factors: N(H), T1, T2 and flow (any movement of nuclei during the imaging sequences).

The clinical value of MRI images is recognised in a large number of pathologies. Examples are the base of the skull and articulations such as the knee.

FIGURE 2: MRI image (1983) with Fourier reconstruction artefact (bottom folded to top).

Current research tends to widen the scope of information gathered. Examples are magnetic resonance angiography (MRA) to visualise the vascular structure without injection of contrast media, functional MRI to visualise areas of specific brain function, and diffusion imaging. Other ongoing efforts involve the shortening of the acquisition times that used to be tens of minutes and are now between seconds and a few minutes.

MRI is a rather safe technique for both patients and staff. Obvious precautions should be taken, such as removing metallic objects that could fly into the magnet because of the very high field strength. Patients with internal metallic objects such as clips should be excluded from the imaging procedure. The same is true for patients with pacemakers. Most other potential hazards are associated with the generation of heat due to induced currents.

Radiography is imaging with an external X-ray source. X-rays were accidentally discovered but not recognised as such by Goodspeed at the University of Pennsylvania in 1890. It is only after Röntgen's discovery in 1895 that radiography was born. Only weeks after the discovery, medical applications started as illustrated by figure 3.

The imaging process in radiography is based on the detection by film or other adequate detectors of the transmission of X-rays originating in a point source (the X-ray tube). Along their path from source to detector, the X-rays (photons with a mean energy in the range between 15 and 60 keV) undergo photon-matter interactions. Among the four classical interactions, the photoelectric effect, Compton scattering, coherent scattering and pair formation, only the first two are relevant because of the energy range.

The photoelectric effect is the main photon-matter interaction of importance in radiography; it creates the shadow image through absorption by the body structures, and allows the detection of the photons by the detector.

FIGURE 3: First Belgian military radiograph, April 1896.

X-ray film is still the most widely used detector. However, the characteristics of film are such that it is not very sensitive to X-rays. Therefore, a phosphor screen that transforms the X-rays into visible light is placed against the film, thereby drastically increasing its sensitivity and allowing a similar decrease in radiation exposure to the patient. Today, large field-of-view semiconductor detectors are gradually replacing film.

FIGURE 4: Coronarography of patient with LAD Stenosis

The spectrum of clinical applications of radiography is overwhelming, but inherently limited by the fact that it is a projection technique: the information along the path of the X-ray is integrated and information on changes in absorption along the path is lost in the image. This is the reason why X-ray computed tomography was developed.

Because of the ionising character of X-rays, a real health risk exists. Early radiographers paid a high toll as victims of radiation induced illnesses such as leukaemia.

The loss of information due to the projection of a shadow in classical radiography limits its clinical value. Several methods have been devised in order to overcome this loss: tomography through blurring of out-of-focus structures by moving the X-ray source and film in opposite directions, stereoscopic views, etc. The advent of powerful data processing allowed for new approaches and in 1972 Hounsfield introduced Computed Tomography (CT) following pioneering work carried out by Oldendorf and Cormack.

FIGURE 5: CT image with beam hardening artefacts

In order to have enough data to mathematically reconstruct virtual slices, one needs projections from different angles. Two angles allow the reconstruction of objects as squares. This is of course not satisfactory. As a rule of thumb, the quality of the reconstruction (shape, intensity...) and resolution in an image increases with the number of projections. However, for a fixed total acquisition time, the noise in each projection also increases with this number. Some optimum has to be found between resolution and noise. In today's CT scanners, thousands of fixed solid state scintillator detectors span a 2π arc, while an X-ray tube rotates at high speed (up to 1 revolution/s) over a full circle around the patient. Tomographic images are then reconstructed by means of analytical or iterative reconstruction algorithms.
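The remark about two angles can be illustrated with a toy example. The sketch below projects a small binary disc along two perpendicular directions and marks every pixel that is compatible with both projections; the result is the square that encloses the disc. It is a deliberately simplified illustration with hypothetical function names, not the analytical or iterative algorithms used in real scanners.

```python
def disc_image(size, radius):
    """Binary image of a centred disc (1 inside, 0 outside)."""
    c = (size - 1) / 2.0
    return [[1 if (i - c) ** 2 + (j - c) ** 2 <= radius ** 2 else 0
             for j in range(size)]
            for i in range(size)]


def project_rows(img):
    """Projection at 0 degrees: sum of each row."""
    return [sum(row) for row in img]


def project_cols(img):
    """Projection at 90 degrees: sum of each column."""
    return [sum(col) for col in zip(*img)]


def backproject_support(rows, cols):
    """Pixels compatible with both projections being non-zero."""
    return [[1 if r > 0 and c > 0 else 0 for c in cols] for r in rows]


img = disc_image(11, 4)
support = backproject_support(project_rows(img), project_cols(img))
disc_pixels = sum(map(sum, img))
support_pixels = sum(map(sum, support))

for row in support:
    print("".join("#" if v else "." for v in row))
print(f"disc pixels: {disc_pixels}, reconstructed support: {support_pixels}")
```

Adding projections at further angles would progressively cut the corners off the square, which is the intuition behind the rule of thumb above.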

As for projection radiography, a drawback of CT is the radiation burden to the patient, especially for young children. It is expected that the future switch from integrating detectors to counting detectors will allow a drastic reduction in patient dose for equivalent image quality, thus reducing this burden.

The use of radioactive tracers that are introduced in the living system to study its metabolism dates from 1923 when de Hevesy and Paneth studied the transport of radioactive lead in plants. In 1935, de Hevesy and Chiewitz were the first to apply the method to the study of the distribution of a radiotracer (P-32) in rats.

The major development of nuclear imaging (also called scintigraphic imaging) started with the invention of the gamma camera by Anger in 1956. In parallel, positron imaging was developed. Both imaging modalities are now standard in the major nuclear medicine departments.

The tracer principle, which forms the basis of nuclear imaging, is the following: a radioactive biologically active substance is chosen in such a way that its spatial and temporal distribution in the body reflects a particular body function or metabolism. In order to study the distribution without disturbing the body function, only traces of the substance are administered to the patient.

The radiotracer decays by emitting gamma rays or positrons (followed by annihilation gamma rays). The distribution of the radioactive tracer is inferred from the detected gamma rays and mapped as a function of time and/or space.

The most often used radio-nuclides are Tc-99m in 'single photon' imaging and F-18 in 'positron' imaging.

Tc-99m is the decay daughter of Mo-99, which itself is a fission product of uranium. The half-life of Tc-99m is 6 h, which is optimal for most metabolic studies but too short to allow for shelf storage. Mo-99 has a half-life of about 66 h. This allows a Mo-99 generator (a 'cow') to be stored and Tc-99m to be 'milked' when required. Tc-99m decays to Tc-99 by emitting a gamma ray with an energy of 140 keV. This energy is optimal for detection by scintillator detectors. Tc-99 itself has a half-life of 211,100 years; its activity is correspondingly low and it is therefore a negligible burden to the patient.
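The practical consequence of a 6 h half-life can be made concrete with the exponential decay law. The sketch below is a minimal illustration using the Tc-99m half-life quoted in the text; the function name `remaining_fraction` is hypothetical, and biological elimination of the tracer from the body is ignored.

```python
def remaining_fraction(elapsed, half_life):
    """Fraction of the initial activity left after `elapsed`,
    expressed in the same time unit as `half_life`."""
    return 0.5 ** (elapsed / half_life)


TC99M_HALF_LIFE_H = 6.0  # value quoted in the text

for hours in (0, 6, 12, 24, 48):
    fraction = remaining_fraction(hours, TC99M_HALF_LIFE_H)
    print(f"after {hours:2d} h: {fraction:.4f} of the initial Tc-99m activity")
```

After one day only about 6% of the activity is left, which is why Tc-99m cannot be kept on the shelf and has to be drawn from a generator.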

F-18 is cyclotron produced and has a half-life of 110 minutes. It decays to stable O-18 by emitting a positron. The positron loses its kinetic energy through Coulomb interactions with surrounding nuclei. When it is nearly at rest, which in tissue occurs after an average range of less than 1 mm, the probability of a collision with an electron greatly increases and becomes one. During the collision matter-antimatter annihilation occurs in which the rest mass of the electron and the positron is transformed into two gamma rays of 511 keV. The two gamma rays originate at exactly the same time (they are “coincident”) and leave the point of collision in almost opposite directions.
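The value of 511 keV follows directly from the rest mass of the electron (the positron has the same mass), so each of the two gamma rays carries the rest energy of one particle. The sketch below checks this with standard values of the physical constants; the function name is hypothetical, and any residual kinetic energy at the moment of annihilation is neglected.

```python
ELECTRON_MASS_KG = 9.1093837015e-31   # electron (and positron) rest mass
SPEED_OF_LIGHT_M_S = 299792458.0      # speed of light in vacuum
JOULE_PER_EV = 1.602176634e-19        # one electronvolt in joules


def rest_energy_kev(mass_kg):
    """Rest energy E = m * c^2, expressed in keV."""
    return mass_kg * SPEED_OF_LIGHT_M_S ** 2 / JOULE_PER_EV / 1e3


print(f"energy per annihilation photon: {rest_energy_kev(ELECTRON_MASS_KG):.1f} keV")
```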

Because the source of the rays is no longer a point source, but distributed through the object, adapted 'optics' have to be used for image formation. There is no known material which refracts gamma rays the way that lenses do with visible light. One, therefore, has to rely on selective absorption of the rays based on geometrical criteria. The first method, historical but still used for particular applications, is based on the 'camera obscura' principle: a lead cone is placed over the detector and a pin-hole opening is made at the top of the cone, perpendicular to the centre of the detector surface.

Only those rays which pass through the pin-hole form an image on the detector. The image is inverted and enlarged or reduced with respect to the object, depending on the distances between object, pin-hole and detector. The second method is based on the multiple hole collimator: a thick lead or tungsten sheet in which thousands of parallel holes are drilled (other manufacturing techniques exist). Typical hole sizes are a couple of cm in length with a diameter of a couple of mm. The collimator structure is an inherent limitation to the ultimate camera resolution. Furthermore, its geometric efficiency is very low (e.g. 10⁻⁴).

Only those rays that hit the detector through the holes in parallel contribute to the image, which then corresponds to a one to one mapping of the radioactive distribution.
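Both kinds of 'optics' can be described with simple geometry. The sketch below gives the magnification of a pin-hole and a common textbook estimate of the geometric resolution of a parallel-hole collimator. It is an illustration under stated assumptions: penetration of the lead walls between holes is neglected, the source distance is measured from the collimator face, and the function names and example dimensions are hypothetical (chosen to match 'a couple of cm' and 'a couple of mm').

```python
def pinhole_magnification(pinhole_to_detector, object_to_pinhole):
    """Size of the (inverted) image relative to the object.
    Values above 1 mean enlargement, below 1 reduction."""
    return pinhole_to_detector / object_to_pinhole


def parallel_hole_resolution(hole_diameter, hole_length, source_distance):
    """Geometric resolution of a parallel-hole collimator, in the same
    length unit as the arguments. It worsens linearly with the distance
    between the source and the collimator face."""
    return hole_diameter * (hole_length + source_distance) / hole_length


# Pin-hole 20 cm from the detector, object 10 cm from the pin-hole.
print(f"pin-hole magnification: {pinhole_magnification(20.0, 10.0):.1f}x")

# Holes of 2 mm diameter and 20 mm length, source at 0 to 200 mm.
for distance_mm in (0.0, 50.0, 100.0, 200.0):
    res = parallel_hole_resolution(2.0, 20.0, distance_mm)
    print(f"source at {distance_mm:5.1f} mm: resolution about {res:.1f} mm")
```

The estimate shows why the camera is placed as close to the patient as possible: resolution degrades steadily with distance from the collimator.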

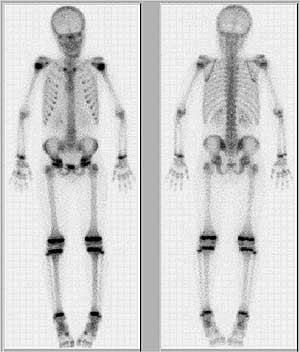

FIGURE 6: Bone scan, depicting bone metabolism in young patient.

In the Anger gamma camera, a large (e.g. 40x60x1 cm) NaI mono-crystal is used as the scintillation detector. The scintillations are detected by an array of about 100 photomultipliers. The distribution among the photomultipliers of the detected scintillation photons allows the place of detection on the crystal to be determined with a resolution of a few millimetres. The total number of detected photons allows their energy to be determined with a precision between 10 and 15%: the energy resolution.
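The positioning principle can be sketched as a signal-weighted centroid: the place of detection is estimated from how the light is shared among the photomultipliers, and the energy from the total amount of light. The code below is a simplified illustration with hypothetical names and made-up signal values; real cameras apply additional linearity, energy and uniformity corrections that are not shown here.

```python
def anger_position_and_energy(pmt_positions_cm, pmt_signals):
    """Estimate the scintillation position as the signal-weighted centroid
    of the photomultiplier positions, and the energy as the summed signal."""
    total = sum(pmt_signals)
    if total <= 0:
        raise ValueError("no scintillation light detected")
    x = sum(px * s for (px, _), s in zip(pmt_positions_cm, pmt_signals)) / total
    y = sum(py * s for (_, py), s in zip(pmt_positions_cm, pmt_signals)) / total
    return (x, y), total


# Three photomultipliers in a row; most of the light reaches the middle one.
positions = [(0.0, 0.0), (5.0, 0.0), (10.0, 0.0)]
signals = [100.0, 300.0, 100.0]

(x_cm, y_cm), energy_signal = anger_position_and_energy(positions, signals)
print(f"estimated position: ({x_cm:.2f}, {y_cm:.2f}) cm, "
      f"summed signal: {energy_signal:.0f}")
```

Because the position is interpolated between tubes, the resolution of a few millimetres is much finer than the size of an individual photomultiplier.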

In standard nuclear medicine practice, images are acquired during seconds to minutes. The spatial resolution of the images is between 0.5 and 1.5 cm and the contrast resolution is rather low. This is in part due to the fact that the images are projection images.

Although the number of photons per pixel may become extremely small, it may be of use to acquire series of images to study the dynamics of large areas in the image. The averaging effect over a large number of pixels, a 'region of interest', then compensates for the short acquisition time. An example of this is the use of nuclear imaging for the study of the heart function, in which a series of 8 to 16 images, representing one cardiac cycle, is acquired. Using specific processing techniques, such as temporal Fourier filtering, important clinical information can be retrieved.
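The averaging effect follows from counting statistics: for Poisson-distributed counts, the relative statistical uncertainty is one over the square root of the number of counts. The sketch below compares a single pixel with a region of interest. The function names and the example count numbers are hypothetical.

```python
import math


def relative_poisson_noise(counts):
    """Relative statistical uncertainty of a Poisson count."""
    return 1.0 / math.sqrt(counts)


def roi_relative_noise(counts_per_pixel, n_pixels):
    """Relative uncertainty of the summed counts in a region of interest,
    assuming the same mean count in every pixel."""
    return relative_poisson_noise(counts_per_pixel * n_pixels)


counts_per_pixel = 4   # a short frame with very few photons per pixel
roi_size = 400         # number of pixels in the region of interest

print(f"single pixel:  {relative_poisson_noise(counts_per_pixel):.1%}")
print(f"400-pixel ROI: {roi_relative_noise(counts_per_pixel, roi_size):.1%}")
```

With these example numbers the uncertainty drops from 50% for one pixel to 2.5% for the region, at the cost of spatial detail.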

By rotating the gamma camera around the patient and acquiring a large set of projections, enough data become available to reconstruct tomographic emission images. Kuhl developed emission tomography in 1964.

In tomographic imaging, the spatial resolution of the images is similar to planar imaging, but lesion contrast and, therefore, also detectability, is greatly improved.

FIGURE 7: Tomographic image of myocardial perfusion defect at exercise

In PET, the administered radio-nuclide decays due to the emission of a positron which in turn collides with an electron and is annihilated. In the process, two 511 keV gamma rays originate simultaneously and leave the annihilation site in opposite directions. Positron imaging was introduced by Brownell in 1951. Current ring PET cameras take advantage of the annihilation characteristics. A ring of scintillation detectors surrounds the patient. If two events are detected simultaneously in two opposed detectors, one assumes that an annihilation occurred somewhere on an imaginary line connecting the two detectors. By acquiring a large number of lines, e.g. 10⁶, tomographic reconstruction methods can be used to reconstruct images of the tracer distribution.

The detectors used are scintillating detectors. Their stopping power should be high enough for 511 keV photons. Therefore, the detectors should be made out of high Z material and have a large enough detection volume. This last point however will reduce the precision of the localisation, as a precise spatial localisation requires small detectors. Furthermore, scattered rays should be rejected as they will generate lines that do not reflect the location of the annihilation. This requires a good energy resolution, which in turn requires large crystals. Finally, coincident detection implies a precise timing of events. The timing using scintillators depends on the temporal characteristics of the light generation in the detector. Therefore, finite coincidence time windows are set in order to accommodate for the detector response. This inevitably will lead to 'random' coincidences, in which two unrelated events are falsely attributed to the same annihilation. Blurring, scattered events and random events will therefore degrade the data sets. Current research is directed towards improving detector characteristics, geometrical configurations and reconstruction algorithms in order to improve the final image quality.
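The link between the coincidence time window and the rate of 'random' coincidences can be estimated with a standard expression: for a detector pair, the random rate is twice the window width times the product of the two single-event rates. The sketch below is an illustration only. It assumes that two events are accepted as coincident when they are less than `window_s` apart, and the function name and the example rates are hypothetical.

```python
def random_coincidence_rate(singles_a_cps, singles_b_cps, window_s):
    """Expected rate (per second) of accidental coincidences between two
    detectors with uncorrelated single-event rates, when events closer
    in time than window_s are accepted as coincident."""
    return 2.0 * window_s * singles_a_cps * singles_b_cps


singles_cps = 1e5  # example single-event rate for each detector
for window_ns in (2.0, 6.0, 12.0):
    rate = random_coincidence_rate(singles_cps, singles_cps, window_ns * 1e-9)
    print(f"window {window_ns:4.1f} ns: about {rate:.0f} random coincidences/s")
```

The random rate grows with the square of the activity seen by the detectors but only linearly with the window width, which is one reason why faster scintillators are of interest.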

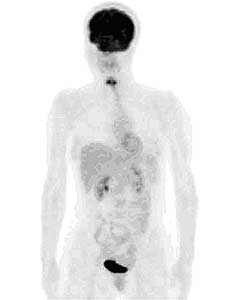

FIGURE 8: PET image of 18-FDG (DeoxyGlucose) metabolism

State-of-the-art clinical cameras have a spatial resolution of a few millimetres, which approaches the optimum given natural patient movements during acquisition times of the order of minutes. Small animal scanners reach the fundamental limit due to the positron range.

PET plays a major role in our understanding of biological processes at the molecular level.

Different imaging modalities generate images that correspond to different characteristics of the body or to different geometrical maps. They pose different short or long-term risks or concerns to the patient, the personnel and the working environment. The investment and running costs of the modalities differ, as do their availability.

The choice of an imaging technique is based on a balanced evaluation of the above stated factors. More than anything else, however, the following question should first be asked and answered: If the outcome of the examination is positive or negative, will it change the diagnostic or therapeutic pathway for the patient? If not, the examination should not be done.

© European Nuclear Society, 2006